Number Of Slots In Hash Table

Outline

For this reason, chained hash tables remain effective even when the number of table entries (N) is much higher than the number of slots. For separate chaining, the worst-case scenario is when all the entries are inserted into the same linked list. The difference between using a good hash function and a bad hash function makes a big difference in practice in the number of records that must be examined when searching or inserting to the table. Technically, any function that maps all possible key values to a slot in the hash table is a hash function. Use multiple hash tables with different hash functions. →On insert, check every table and pick anyone that has a free slot. →If no table has a free slot, evict the element from one of them and then re-hash it find a new location. Look-ups and deletions are always O(1) because only one location per hash table is checked.

- Motivations and Introduction

- Hash Tables with Chaining

- Hash Functions and Universal Hashing

- Open Addressing Strategies

Readings and Screencasts

- CLRS Sections 11.1-11.4. (Exclude 11.5)

- Screencasts 6A, 6B, 6C, 6D (also in Laulima and iTunesU)

Motivations and Introduction

Many applications only need the insert, search and delete operations of a dynamic set. Example: symbol table in acompiler.

Hash tables are an effective approach. Under reasonable assumptions, theyhave O(1) operations, but they can be Θ(n) worst case

Direct Addressing

Hash tables generalize arrays. Let's look at the idea with arraysfirst. Given a key k from a universe U of possible keys, adirect address table stores and retrieves the element in positionk of the array.

Direct addressing is applicable when we can allocate an array with oneelement for every key (i.e., of size U ). It is trivial toimplement:

However, often the space of possible keys is much larger than the number ofactual keys we expect, so it would be wasteful of space (and sometimes notpossible) to allocate an array of size U .

Hash Tables and Functions

Hash tables are also arrays, but typically of size proportional to thenumber of keys expected to be stored (rather than to the number of keys).

If the expected keys K ⊂ U, the Universe of keys, and K issubstantially smaller than U , then hash tables can reduce storage requirementsto Θ( K ).

A hash functionh(k) maps the larger universe U of externalkeys to indices into the array. Given a table of size m with zero-basedindexing (we shall see why this is useful):

- h : U → {0, 1, ..., m-1}.

- We say that khashes to slot h(k).

Collisions

The major issue to deal with in designing and implementing hash tables iswhat to do when the hash function maps multiple keys to the same tableentry.

Collisions may or may not happen when K ≤ m, but definitelyhappens when K > m. (Is there any way to avoid this?)

There are two major approaches: Chaining (the preferred method) and OpenAddressing. We'll look at these and also hash function design.

Hash Tables with Chaining

A simple resolution: Put elements that hash to the same slot into a linkedlist. This is called chaining because we chain elements off the slot ofthe hash table.

Number Of Slots In Hash Tables

- Slot j points to the head of a list of all stored elements that hash to j, or to NIL if there are no such elements.

- Doubly linked lists may be used when deletions are expected to be frequent.

- Sentinels can also be used to simplify the code.

Pseudocode for Chaining

Implementation is simple if you already have implemented linked lists:

Number Of Slots In Hash Table Search

What are the running times for these algorithms? Which can we statedirectly, and what do we need to know to determine the others?

Analysis of Hashing with Chaining

How long does it take to find an element with a given key, or to determinethat there is no such element?

- Analysis is in terms of the load factor α = n/m, where

- n = number of elements in the table

- m = number of slots in the table = number of (possibly empty) linked lists

- The load factor α is the average number of elements per linked list.

- Can have α < 1; α = 1; or α > 1.

- Worst case is when all n keys hash to the same slot.

Why? What happens? Θ(_____?) - Average case depends on how well the hash function distributes the keys among the slots.

Let's analyze averge-case performance under the assumption of simpleuniform hashing: any given element is equally likely to hash into any of them slots:

- For j = 0, 1, ..., m-1, denote the length of list T[j] by nj.

- Then n = n0 + n1 + ... + nm-1.

- Average value of nj is E[nj] = α = n/m.

- Assuming h(k) computed in O(1), so time to search for k depends on length nh(k) of the list T[h(k)].

Consider two cases: Unsuccessful and Successful search. The former analysisis simpler because you always search to the end, but for successful search itdepends on where in T[h(k)] the element with key k will befound.

Unsuccessful Search

Simple uniform hashing means that any key not in the table is equally likelyto hash to any of the m slots.

We need to search to end of the list T[h(k)]. It has expected lengthE[nh(k)] = α = n/m.

Adding the time to compute the hash function gives Θ(1 +α). (We leave in the '1' term for the initial computation of hsince α can be 0, and we don't want to say that the computation takesΘ(0) time).

Successful Search

We assume that the element x being searched for is equally likely tobe any of the n elements stored in the table.

The number of elements examined during a successful search for x is 1more than the number of elements that appear before x in x's list(because we have to search them, and then examine x).

These are the elements inserted after x was inserted (because weinsert at the head of the list).

Need to find on average, over the n elements x in the table,how many elements were inserted into x's list after x wasinserted. Lucky we just studied indicator random variables!

For i = 1, 2, ..., n, let xi be theith element inserted into the table, and let ki =key[xi].

For all i and j, define the indicator random variable:

Xij = I{h(ki) = h(kj)}. (The event that keys ki and kj hash to the same slot.)

Simple uniform hashing implies that Pr{h(ki) =h(kj)} = 1/m(Why?)

Therefore, E[Xij] = 1/m by Lemma 1 (Topic #5).

The expected number of elements examined in a successful search is thoseelements j that are inserted after the element i of interestand that end up in the same linked list (Xij):

- The innermost summation is adding up, for all j inserted after i (j=i+1), those that are in the same hash table (when Xij = 1).

- The '1' is for the cost of inserting the element i itself (regardless of whether any j are inserted after it).

- The outermost summation runs this over all n of the keys inserted (indexed by i), and finds the average by dividing by n.

I fill in some of the implicit steps in the rest of the CLRS textanalysis. First, by linearity of expectation we can move the E in:

That is the crucial move: instead of analyzing the probability of complexevents, use indicator random variables to break them down into simple eventsthat we know the probabilities for. In this case we knowE[Xi,j] (if you don't know, ask the lemming above):

Multiplying 1/n by the terms inside the summation,

- For the first term, we get Σi=1,n1/n, which is just n/n or 1

- Move 1/m outside the summation of the second term to get 1/nm. This leaves Σi=1,n(Σj=i+1,n1), which simplifies as shown below (if you added 1 n times, you would overshoot by i).

Splitting the two terms being summed, the first is clearlyn2, and the second is the familiar sum of the first nnumbers:

Distributing the 1/nm, we get1 + (n2/nm - n(n+1)/2nm = 1 + n/m - (n+1)/2m = 1 + 2n/2m - (n+1)/2m, and now we can combine the two fractions:

Now we can turn two instances of n/m into α with this preparation:1 + (n - 1)/2m = 1 + n/2m - 1/2m = 1 + α/2 - n/2mn =

Adding the time (1) for computing the hash function, the expected total timefor a successful search is:

Θ(2 + α/2 - α/2n) = Θ(1 + α).

since the third term vanishes in significance as n grows, and theconstants 2 and 1/2 have Θ(1) growth rate.

Thus, search is an average of Θ(1 + α) in either case.

If the number of elements stored n is bounded within a constant factorof the number of slots m, i.e., n = O(m), then α is aconstant, and search is O(1) on average.

Since insertion takes O(1) worst case and deletion takes O(1) worst case whendoubly linked lists are used, all three operations for hash tables are O(1) onaverage.

We went through that analysis in detail to show again the utilityof indicator random variables and to demonstrate what is possibly the mostcrucial fact of this chapter, but we won't do the other analyses in detail. Withperserverence you can similarly unpack the other analyses.

Hash Functions and Universal Hashing

Ideally a hash function satisfies the assumptions of simple uniformhashing.

This is not possible in practice, since we don't know in advance theprobability distribution of the keys, and they may not be drawn independently.

Instead, we use heuristics based on what we know about the domain of the keysto create a hash function that performs well.

Keys as natural numbers

Hash functions assume that the keys are natural numbers. When they are not, aconversion is needed. Some options:

- Floating point numbers: If an integer is required, sum the mantissa and exponent, treating them as integers.

- Character string: Sum the ASCII or Unicode values of the characters of the string.

- Character string: Interpret the string as an integer expressed in some radix notation. (This gives very large integers.)

Division method

A common hash function: h(k) = k mod m.

(Why does this potentially produce all legal values, and only legalvalues?)

Advantage: Fast, since just one division operation required.

Disadvantage: Need to avoid certain values of m, for example:

- Powers of 2. If m = 2p for integer p then h(k) is the least significant p bits of k.

(There may be a domain pattern that makes the keys clump together). - If character strings are interpreted in radix 2p then m = 2p - 1 is a bad choice: permutations of characters hash the same.

A prime number not too close to an exact power of 2 is a good choice form.

Multiplication method

h(k) = Floor(m(k A mod 1)), where k A mod 1 =fractional part of kA.

- Choose a constant A in range 0 < A < 1.

- Multiply k by A

- Extract the fractional part of kA

- Multiply the fractional part by m

- Take the floor of the result.

Disadvantage: Slower than division.

Advantage: The value of m is not critical.

The book discusses an implementation that we won't get into ...

Universal Hashing

Our malicious adversary is back! He's choosing keys that all hash to the sameslot, giving worst case behavior and gumming up our servers! What to do?

Random algorithms to the rescue: randomly choose a different hash functioneach time you construct and use a new hash table.

But each hash function we choose has to be a good one. Can we define a familyof good candidates?

Consider a finite collection Η of hash functions that map universeU of keys into {0, 1, ..., m-1}.

Η is universal if for each pair of keys k, l ∈U, where k ≠ l, the number of hash functions h ∈ Η forwhich h(k) = h(l) is less than or equal to Η /m (that's thesize of Η divided by m).

In other words, with a hash function h chosen randomly fromΗ, the probability of collision between two different keys is no morethan 1/m, the chance of a collision when choosing two slots randomly andindependently.

Universal hash functions are good because (proven as Theorem 11.3 in text):

- If k is not in the table, the expected length E[nh(k)] of the list that k hashes to is less than or equal to α.

- If k is in the table, the expected length E[nh(k)] of the list that holds k is less than or equal to 1 + α.

Therefore, the expected time for search is O(1).

One candidate for a collection Η of hash functions is:

Η = {hab(k) :hab(k) = ((ak + b) mod p) modm)}, where a ∈ {1, 2, ..., p-1} and b∈ {0, 1, ..., p-1}, where p is prime and larger than thelargest key.

See CLRS for the details, including proof that this provides a universal setof hash functions. Java built in hash functions take care of much of this foryou: read the Java documentation for details.

Open Addressing Strategies

Open Addressing seeks to avoid the extra storage of linked lists by puttingall the keys in the hash table itself.

Of course, we need a way to deal with collisions. If a slot is alreadyoccupied we will apply a systematic strategy for searching for alternativeslots. This same strategy is used in both insertion and search.

Probes and h(k, i)

Examining a slot is called a probe. We need to extend the hashfunction h to take the probe number as a second argument, so thath can try something different on subsequent probes. We count probes from0 to m-1 (you'll see why starting at probe 0 is useful later when wedefine double hashing), so the second argument takes on the same values as theresult of the function:

h : U x {0, 1, ... m-1} → {0, 1, ... m-1}

We require that the probe sequence

⟨ h(k, 0), h(k, 1) ... h(k, m-1) ⟩

be a permutation of ⟨ 0, 1, ... m-1 ⟩. Another way to statethis requirement is that if we have as many probes as positions all thepositions are visited exactly once.

There are three possible outcomes to a probe: k is in the slot probed(successful search); the slot contains NIL (unsuccessful search); or some otherkey is in the slot (need to continue search).

The strategy for this continuation is the crux of the problem, but firstlet's look at the general pseudocode.

Pseudocode

The pseudocode below does not make a committment as to how subsquent probesare handled: that is up to the function h(k, i). Thepseudocode just handles the mechanics of trying until success or an errorcondition is met.

Insertion returns the index of the slot it put the element ink, or throws an error if the table is full:

Search returns either the index of the slot containing element of keyk, or NIL if the search is unsuccessful:

Deletion is a bit complicated. We can't just write NIL into the slotwe want to delete. (Why?)

Instead, we write a special value DELETED. During search, we treat it as ifit were a non-matching key, but insertion treats it as empty and reuses theslot.

Problem: With this approach to deletion, the search time is no longerdependent on α. (Why?)

The ideal is to have uniform hashing, where each key is equally likelyto have any of the m! permutations of ⟨0, 1, ... m-1⟩ asits probe sequence. But this is hard to implement: we try to guarantee that theprobe sequence is some permutation of ⟨0, 1,... m-1⟩.

We will define the hash functions in terms of auxiliary hashfunctions that do the initial mapping, and define the primary function interms of its ith iterations, where 0 ≤ i < m.

Linear Probing

Given an auxiliary hash function h', the probe sequence startsat h'(k), and continues sequentially through the table:

h(k, i) = (h'(k) + i) mod m

Problem:primary clustering: sequences of keys with the sameh' value build up long runs of occupied sequences.

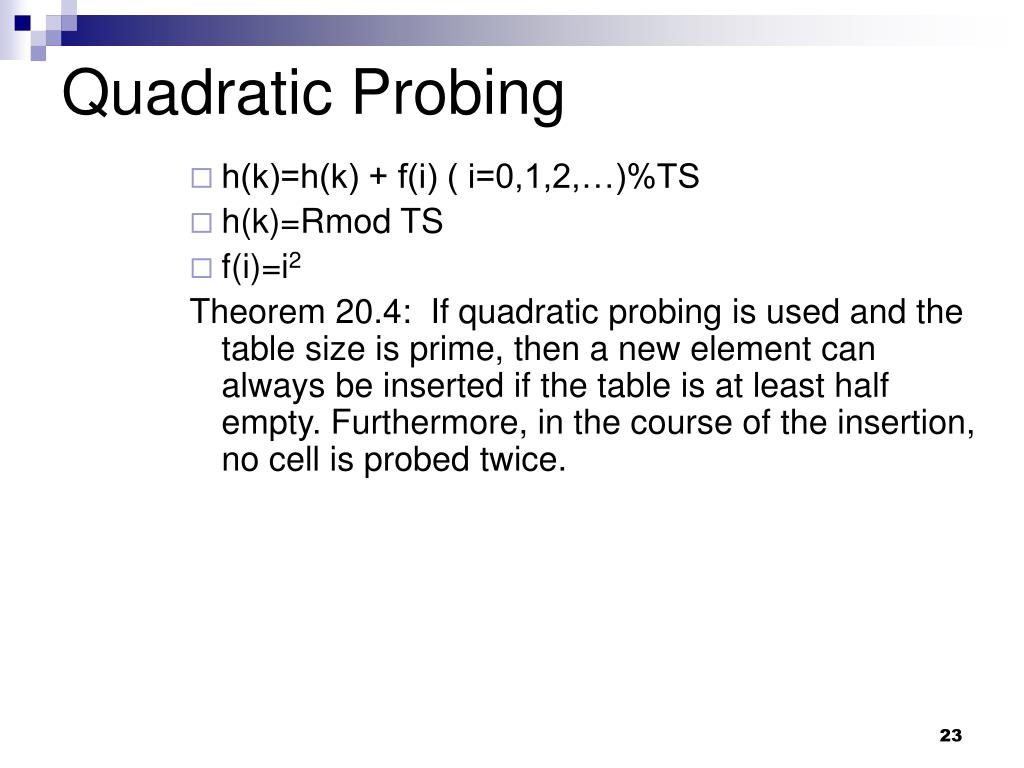

Quadratic Probing

Quadratic probing is attempt to fix this ... instead of reprobing linearly,QP 'jumps' around the table according to a quadratic function of the probe, forexample:

h(k, i) = (h'(k) + c1i +c2i2) mod m,

where c1 and c2 are constants.

Problem:secondary clustering: although primary clusters acrosssequential runs of table positions don't occur, two keys with the same h'may still have the same probe sequence, creating clusters that are broken acrossthe same sequence of 'jumps'.

Double Hashing

A better approach: use two auxiliary hash functions h1 andh2, where h1 gives the initial probe andh2 gives the remaining probes (here you can see that havingi=0 initially drops out the second hash until it is needed):

h(k, i) = (h1(k) + ih2(k)) mod m.

h2(k) must be relatively prime to m(relatively prime means they have no factors in common other than 1) toguarantee that the probe sequence is a full permutation of ⟨0, 1,... m-1⟩. Two approaches:

- Choose m to be a power of 2 and h2 to always produce an odd number > 1.

- Let m be prime and have 1 ≤ h2(k) < m.

(The example figure is h1(k) = k mod 13, and h2(k) = 1 + (k mod 11).)

There are Θ(m2) different probe sequences, since eachpossible combination of h1(k) andh2(k) gives a different probe sequence. This is animprovement over linear or quadratic hashing.

Number Of Slots In Hash Tableau

Analysis of Open Addressing

The textbook develops two theorems you will use to compute the expectednumber of probes for unsuccessful and successful search. (These theorems requireα < 1 because an expression 1/1−α is derived and we don'twant to divide by 0 ... and of course at α = 1 the table is full!)

Theorem 11.6: Given an open-address hash table with load factor α =n/m < 1, the expected number of probes in anunsuccessful search is at most 1/(1 − α),assuming uniform hashing.

Theorem 11.8: Given an open-address hash table with load factor α =n/m < 1, the expected number of probes in asuccessful search is at most (1/α) ln (1/(1 −α)), assuming uniform hashing and assuming that each key in the tableis equally likely to be searched for.

We leave the proofs for the textbook, but note particularly the 'intuitiveinterpretation' in the proof of 11.6 of the expected number ofprobes on page 275:

E[X] = 1/(1-α) = 1 + α + α2 + α3 + ...

We always make the first probe (1). With probability α < 1, thefirst probe finds an occupied slot, so we need to probe a second time(α). With probability α2, the first two slots areoccupied, so we need to make a third probe ...

Dan SuthersLast modified: Sat Sep 12 02:51:18 HST 2020Images are from the instructor's material for Cormen et al. Introduction to Algorithms, ThirdEdition.